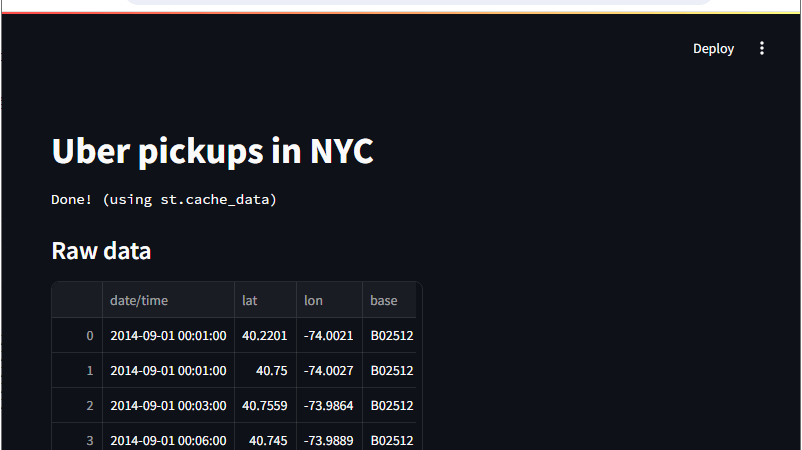

[PYTHON/STREAMLIT] @cache_data decorator: caching the data a function returns

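■ This example shows how to use the @st.cache_data decorator to cache the data a function returns, so the CSV is downloaded and parsed only once rather than on every script rerun.

▶ main.py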


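```python
import streamlit as st
import pandas as pd

# The first call with a given (url, rowCount) runs the body and stores the result;
# later calls with the same arguments return a cached copy instead of re-downloading.
@st.cache_data
def loadData(url, rowCount):
    # Read only the first rowCount rows of the gzip-compressed CSV.
    dataFrame = pd.read_csv(url, nrows = rowCount)
    # Lowercase every column name (e.g. "Date/Time" -> "date/time").
    changeToLowerCaseString = lambda x: str(x).lower()
    dataFrame.rename(changeToLowerCaseString, axis = "columns", inplace = True)
    # Parse the pickup timestamp column into datetime values.
    dataFrame["date/time"] = pd.to_datetime(dataFrame["date/time"])
    return dataFrame

st.title("Uber pickups in NYC")

# Show a placeholder message, then overwrite it once the data is loaded.
loadingDataText = st.text("Loading data...")
dataFrame = loadData("https://s3-us-west-2.amazonaws.com/streamlit-demo-data/uber-raw-data-sep14.csv.gz", 10000)
loadingDataText.text("Done! (using st.cache_data)")

st.subheader("Raw data")
st.write(dataFrame)
```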
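In main.py, `loadData` only downloads and parses the CSV the first time it is called with a given `url`/`rowCount`; on later reruns, Streamlit serves the cached result. The stdlib-only sketch below imitates that behavior so it can be observed without a network or a Streamlit runtime. It is not Streamlit's implementation: `cache_data_sketch`, `callCount`, and the in-memory `loadData` stand-in are hypothetical names. It assumes, as Streamlit documents for `st.cache_data`, that return values are pickled and that every call receives a fresh copy.

```python
import functools
import pickle


def cache_data_sketch(func):
    """Hypothetical stand-in for @st.cache_data: memoize by arguments,
    keep the pickled result, and hand back a new copy on every call."""
    store = {}  # maps an argument key to the pickled return value

    @functools.wraps(func)
    def wrapper(*args, **kwargs):
        # A plain tuple of the arguments stands in for Streamlit's argument hash.
        key = (args, tuple(sorted(kwargs.items())))
        if key not in store:
            # Cache miss: run the function body once and store the serialized result.
            store[key] = pickle.dumps(func(*args, **kwargs))
        # Always deserialize, so each caller gets its own independent copy.
        return pickle.loads(store[key])

    return wrapper


callCount = 0


@cache_data_sketch
def loadData(url, rowCount):
    # Hypothetical loader: no network access, it only counts executions.
    global callCount
    callCount += 1
    return [{"url": url, "row": i} for i in range(rowCount)]


first = loadData("demo.csv", 3)
second = loadData("demo.csv", 3)  # same arguments: body is not executed again
third = loadData("demo.csv", 5)   # different rowCount: creates a new cache entry

print(callCount)        # 2
print(first == second)  # True: same contents
print(first is second)  # False: each call gets its own copy
```

Because each call returns a copy, mutating the returned object does not alter the cached value, which is also why values cached with `st.cache_data` must be picklable.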

▶ requirements.txt


```
altair==5.3.0
attrs==23.2.0
blinker==1.8.2
cachetools==5.3.3
certifi==2024.6.2
charset-normalizer==3.3.2
click==8.1.7
gitdb==4.0.11
GitPython==3.1.43
idna==3.7
Jinja2==3.1.4
jsonschema==4.22.0
jsonschema-specifications==2023.12.1
markdown-it-py==3.0.0
MarkupSafe==2.1.5
mdurl==0.1.2
numpy==2.0.0
packaging==24.1
pandas==2.2.2
pillow==10.3.0
protobuf==5.27.2
pyarrow==16.1.0
pydeck==0.9.1
Pygments==2.18.0
python-dateutil==2.9.0.post0
pytz==2024.1
referencing==0.35.1
requests==2.32.3
rich==13.7.1
rpds-py==0.18.1
six==1.16.0
smmap==5.0.1
streamlit==1.36.0
tenacity==8.4.2
toml==0.10.2
toolz==0.12.1
tornado==6.4.1
typing_extensions==4.12.2
tzdata==2024.1
urllib3==2.2.2
watchdog==4.0.1
```

※ The packages above were installed by running the `pip install streamlit` command.