[PYTHON/LANGCHAIN] Searching a CHROMA vector store using chat history

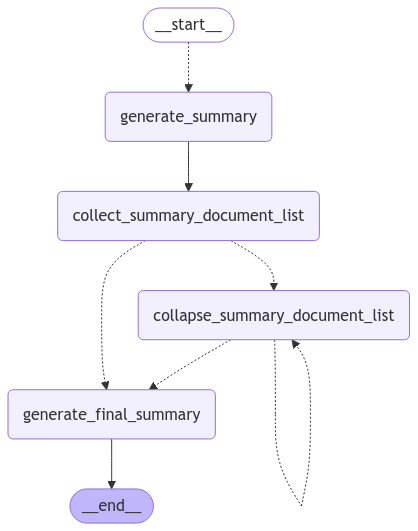

■ Shows how to search a CHROMA vector store using chat history.

※ The value of the OPENAI_API_KEY environment variable is defined in the .env file.

▶ main.py


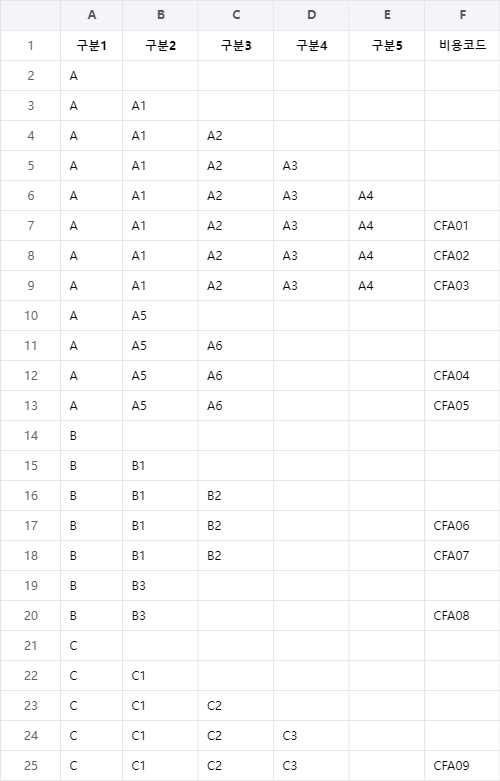

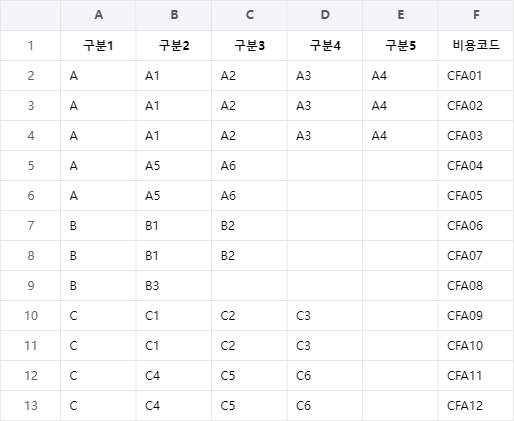

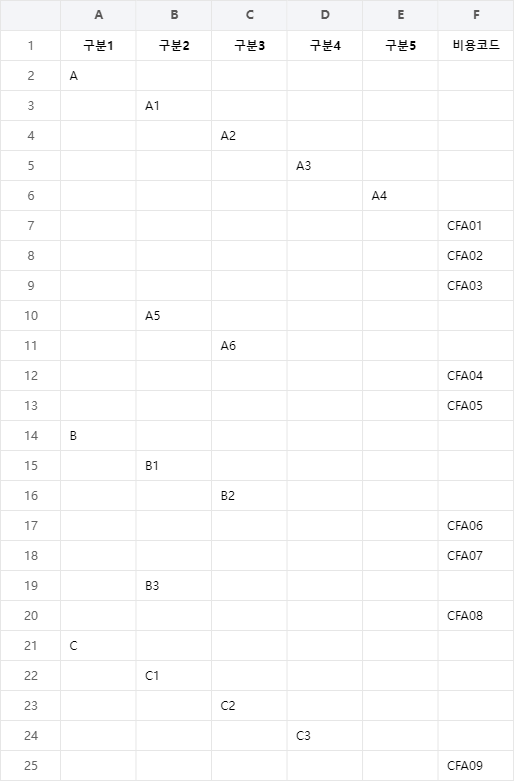

    import bs4

    from dotenv import load_dotenv
    from langchain_openai import ChatOpenAI
    from langchain_community.document_loaders import WebBaseLoader
    from langchain_text_splitters import RecursiveCharacterTextSplitter
    from langchain_chroma import Chroma
    from langchain_openai import OpenAIEmbeddings
    from langchain_core.prompts import ChatPromptTemplate
    from langchain_core.prompts import MessagesPlaceholder
    from langchain.chains import create_history_aware_retriever
    from langchain.chains.combine_documents import create_stuff_documents_chain
    from langchain.chains import create_retrieval_chain
    from langchain_core.chat_history import BaseChatMessageHistory
    from langchain_community.chat_message_histories import ChatMessageHistory
    from langchain_core.runnables.history import RunnableWithMessageHistory
    from langchain_core.messages import AIMessage

    # Load OPENAI_API_KEY from the .env file.
    load_dotenv()

    chatOpenAI = ChatOpenAI(model = "gpt-4o")

    # Load the blog post, keeping only the title, header and body elements.
    webBaseLoader = WebBaseLoader(
        web_paths = ("https://lilianweng.github.io/posts/2023-06-23-agent/",),
        bs_kwargs = dict(parse_only = bs4.SoupStrainer(class_ = ("post-content", "post-title", "post-header")))
    )

    documentList = webBaseLoader.load()

    # Split the document into overlapping chunks and index them in Chroma.
    recursiveCharacterTextSplitter = RecursiveCharacterTextSplitter(chunk_size = 1000, chunk_overlap = 200)

    splitDocumentList = recursiveCharacterTextSplitter.split_documents(documentList)

    openAIEmbeddings = OpenAIEmbeddings()

    chroma = Chroma.from_documents(documents = splitDocumentList, embedding = openAIEmbeddings)

    vectorStoreRetriever = chroma.as_retriever()

    # Prompt 1 : rewrite the latest question as a standalone question using the chat history.
    systemMessage1 = "Given a chat history and the latest user question which might reference context in the chat history, formulate a standalone question which can be understood without the chat history. Do NOT answer the question, just reformulate it if needed and otherwise return it as is."

    chatPromptTemplate1 = ChatPromptTemplate.from_messages(
        [
            ("system", systemMessage1),
            MessagesPlaceholder("chat_history"),
            ("human", "{input}")
        ]
    )

    # Retriever that takes the chat history into account when searching.
    runnableBinding1 = create_history_aware_retriever(chatOpenAI, vectorStoreRetriever, chatPromptTemplate1)

    # Prompt 2 : answer the question from the retrieved context.
    systemMessage2 = "You are an assistant for question-answering tasks. Use the following pieces of retrieved context to answer the question. If you don't know the answer, say that you don't know. Use three sentences maximum and keep the answer concise.\n\n{context}"

    chatPromptTemplate2 = ChatPromptTemplate.from_messages(
        [
            ("system", systemMessage2),
            MessagesPlaceholder("chat_history"),
            ("human", "{input}"),
        ]
    )

    runnableBinding2 = create_stuff_documents_chain(chatOpenAI, chatPromptTemplate2)

    # Full chain : history-aware retrieval followed by answer generation.
    runnableBinding3 = create_retrieval_chain(runnableBinding1, runnableBinding2)

    # One chat history per session ID, kept in memory.
    chatMessageHistoryDictionary = {}

    def GetChatMessageHistoryDictionary(session_id : str) -> BaseChatMessageHistory:
        if session_id not in chatMessageHistoryDictionary:
            chatMessageHistoryDictionary[session_id] = ChatMessageHistory()
        return chatMessageHistoryDictionary[session_id]

    runnableWithMessageHistory = RunnableWithMessageHistory(
        runnableBinding3,
        GetChatMessageHistoryDictionary,
        input_messages_key   = "input",
        history_messages_key = "chat_history",
        output_messages_key  = "answer",
    )

    responseDictionary1 = runnableWithMessageHistory.invoke(
        {"input" : "What is Task Decomposition?"},
        config = {"configurable" : {"session_id" : "abc123"}}
    )

    answer1 = responseDictionary1["answer"]

    print(answer1)
    print("-" * 50)

    # Sample output from one run; model responses vary between runs.
    """
    Task Decomposition is a technique used to break down complex tasks into smaller, manageable steps. It is often implemented using methods like Chain of Thought (CoT) or Tree of Thoughts, which help in systematically exploring and reasoning through various possibilities. This approach enhances model performance by allowing a step-by-step analysis and execution of tasks.
    """

    # The follow-up question ("it") is resolved through the chat history of the same session.
    responseDictionary2 = runnableWithMessageHistory.invoke(
        {"input" : "What are common ways of doing it?"},
        config = {"configurable" : {"session_id" : "abc123"}}
    )

    answer2 = responseDictionary2["answer"]

    print(answer2)
    print("-" * 50)

    # Print the messages stored for the session.
    for message in chatMessageHistoryDictionary["abc123"].messages:
        if isinstance(message, AIMessage):
            prefix = "AI"
        else:
            prefix = "User"
        print(f"{prefix} : {message.content}")

    print("-" * 50)

    # Sample output of a complete run.
    """
    Task decomposition is the process of breaking down a complex task into smaller, more manageable steps or subgoals. This approach, often used in conjunction with techniques like Chain of Thought (CoT), helps enhance model performance by enabling step-by-step reasoning. It can be achieved through prompting, task-specific instructions, or human inputs.
    --------------------------------------------------
    Common ways of performing task decomposition include using straightforward prompts like "Steps for XYZ.\n1." or "What are the subgoals for achieving XYZ?", employing task-specific instructions such as "Write a story outline" for writing a novel, and incorporating human inputs.
    --------------------------------------------------
    User : What is Task Decomposition?
    AI : Task decomposition is the process of breaking down a complex task into smaller, more manageable steps or subgoals. This approach, often used in conjunction with techniques like Chain of Thought (CoT), helps enhance model performance by enabling step-by-step reasoning. It can be achieved through prompting, task-specific instructions, or human inputs.
    User : What are common ways of doing it?
    AI : Common ways of performing task decomposition include using straightforward prompts like "Steps for XYZ.\n1." or "What are the subgoals for achieving XYZ?", employing task-specific instructions such as "Write a story outline" for writing a novel, and incorporating human inputs.
    --------------------------------------------------
    """

▶